Modern buildings rely on thousands of data points to keep people comfortable, safe, and productive. From temperature readings and air quality sensors to elevator positions and occupancy lighting, these systems are only as effective as the information they receive.

But what happens when that information is wrong?

When Bad Data Breaks Smart Buildings

Across hospitals, airports, office towers, and labs, subtle data integrity issues (drifting sensors, corrupted packets, mismatched configurations) can cascade into unplanned disruptions. HVAC systems overcorrect. Lights stay on all night. Elevators grind to a halt. In each case, the technology was in place, but the data behind it was flawed.

Let’s take a high-level look at some real-world examples that reveal the hidden cost of poor data integrity in operational technology (OT) networks, and at how forward-thinking organizations responded. From better sensor validation to network-wide monitoring, their responses offer valuable lessons for anyone managing complex building systems.

Jump to story

- Seattle Children’s Hospital (2019)

- 432 Park Avenue, New York City (2018)

- Imperial Valley (California, 2017)

- Oldsmar Water Treatment Facility (2021)

- Millstone Nuclear Power Plant (2005)

- Google Wharf 7 Office, Sydney, Australia (2013)

- Immaculata Benedictine Sisters Monastery, Norfolk, Nebraska (2011)

- Bank of America Plaza, Atlanta (2017)

Seattle Children’s Hospital (2019)

Organization: Seattle Children’s Hospital is a nationally recognized pediatric medical center offering specialized surgical and critical care services. The hospital relies on advanced HVAC and building automation systems to maintain sterile conditions in operating rooms.

What Happened: Between 2001 and 2019, patients undergoing heart surgery were exposed to Aspergillus, a common but potentially deadly mold, in operating rooms that were presumed sterile. Despite repeated incidents, the root cause remained unresolved for years.

OT Data Integrity Failure: Investigations revealed that the hospital’s HVAC systems were not maintaining appropriate air filtration or pressurization in critical surgical environments. This failure in environmental controls pointed to a breakdown in OT data integrity—specifically, the systems either failed to report or responded inaccurately to conditions that allowed contaminated air into sterile zones. Key performance thresholds were either not properly monitored or not acted upon by the automation system.

Ramifications: The mold exposure was linked to the deaths of at least seven patients. In addition to the tragic loss of life, the hospital faced growing public scrutiny and legal action. In 2024, Seattle Children’s agreed to pay $215,000 to settle claims from affected families, though broader reputational damage and trust issues persist.

Resolution: The hospital implemented extensive upgrades to its HVAC and environmental monitoring systems. This included replacing air handling units, improving HEPA filtration, and introducing more rigorous maintenance and testing protocols. New procedures were also put in place to validate air quality data and ensure early detection of potential air integrity failures.

Source: Hospital To Pay $215,000 Over IAQ Problems
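
The kind of check described in the resolution above, validating environmental data and catching failures early, can be sketched roughly as follows. This is a minimal illustration, not the hospital's actual system: the `Reading` type, the `check_reading` function, the use of differential pressure in pascals, and the threshold values are all assumptions chosen for the example. It flags two conditions mentioned in the failure analysis: a sensor that has stopped reporting, and a value below an acceptable minimum.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Reading:
    timestamp: datetime
    value: float  # differential pressure in pascals (assumed unit for this sketch)


def check_reading(reading, now, min_value, max_age):
    """Return a list of problems found with the most recent reading."""
    problems = []
    # A reading that has not been refreshed recently cannot be trusted,
    # even if its last value looked healthy.
    if now - reading.timestamp > max_age:
        problems.append("stale")
    # A value under the minimum means the room may no longer be
    # positively pressurized relative to its surroundings.
    if reading.value < min_value:
        problems.append("below_minimum")
    return problems


# Hypothetical example: a 30-minute-old reading of 1.0 Pa,
# checked against an assumed 2.5 Pa minimum and 5-minute freshness limit.
now = datetime(2024, 1, 1, 12, 0)
old = Reading(now - timedelta(minutes=30), 1.0)
print(check_reading(old, now, min_value=2.5, max_age=timedelta(minutes=5)))
```

Checking freshness separately from value matters here because a frozen sensor can keep showing a healthy number long after conditions have changed.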

432 Park Avenue, New York City (2018)

Organization: 432 Park Avenue is a luxury residential skyscraper in New York City, housing some of the most expensive condos on Park Ave. Standing 1,396 feet tall with 96 stories and marketed as ultra-premium real estate, the building is home to dozens of high-net-worth individuals.

What Happened: Following occupancy, residents began experiencing persistent mechanical failures. These included frequent elevator shutdowns, major plumbing leaks, and loud structural creaking noises during high winds—unusual for a building of this class and cost.

OT Data Integrity Failure: An independent engineering report found that approximately 73% of the building’s mechanical, electrical, and plumbing (MEP) systems were not constructed according to design specifications. This meant key automation systems—such as water pressure regulators and HVAC control units—were operating based on inaccurate or incomplete data inputs, leading to system misbehavior and cascading failures.

Ramifications: Water intrusion caused damage to at least 35 units, while repeated elevator outages left some residents stranded for extended periods. Structural sounds and vibrations further eroded resident confidence. The situation triggered lawsuits against the developers, engineers, and contractors, alleging poor oversight and negligence in system integration and validation.

Resolution: The building’s management launched a comprehensive remediation plan that included revalidating control system configurations, conducting physical system audits, and initiating repairs to bring mechanical operations in line with original specifications. Additional oversight and QA measures were implemented for ongoing monitoring.

Source: Wikipedia – 432 Park Avenue (en.wikipedia.org)

Imperial Valley (California, 2017)

Organization: California state and local air-quality agencies monitor environmental conditions across the state, including in Imperial County, one of the most polluted regions in the U.S. The community group Comite Civico del Valle has worked to fill air monitoring gaps and advocate for environmental justice in the region.

What Happened: In 2017, frustrated by what they saw as underreported pollution levels, Imperial County residents began deploying their own portable PM10 sensors to monitor airborne particulate matter. Their readings painted a very different picture from the government’s official air quality reports.

OT Data Integrity Failure: The official PM10 monitors used by government agencies were left with their default configurations when installed, designating an upper limit of 985 µg/m³. This cap—left unchanged for years—meant the monitor could not register or report pollution spikes beyond that threshold. In contrast, community-run sensors documented PM10 levels as high as 2,430 µg/m³ on certain days, revealing that the government system was unknowingly masking extreme pollution events due to the hardware configurations and lack of proactive maintenance.

Ramifications: The capped readings created a misleading picture of air quality, underestimating the health risks faced by residents. Without accurate data, authorities failed to issue appropriate warnings or respond adequately to hazardous conditions. Vulnerable populations, including children and the elderly, remained exposed to harmful pollution levels without timely alerts or protective measures.

Resolution: After community advocates brought their findings to light, state officials acknowledged the issue and raised the upper limit of the official PM10 monitors to 10,000 µg/m³ in 2018. This change ensures that future high-pollution events are properly captured and reflected in official records, supporting better public health decisions.

Source: Special Report: U.S. air monitors routinely miss pollution – even refinery explosions
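
A capped monitor leaves a recognizable trace: several consecutive readings sitting exactly at the configured upper limit. The sketch below shows one way such saturation could be flagged. The function name `find_saturated_runs`, the `min_run` and `tolerance` parameters, and the sample readings are illustrative assumptions; only the 985 µg/m³ cap comes from the account above.

```python
def find_saturated_runs(readings, upper_limit, min_run=3, tolerance=0.5):
    """Return (start, end) index pairs where readings sit at the configured cap.

    A run of at least `min_run` consecutive values at the cap suggests the
    instrument is clipping and the true value may be higher.
    """
    runs = []
    start = None
    for i, value in enumerate(readings):
        at_cap = value >= upper_limit - tolerance
        if at_cap and start is None:
            start = i  # a possible saturated run begins here
        elif not at_cap and start is not None:
            if i - start >= min_run:
                runs.append((start, i - 1))
            start = None
    # Handle a run that continues to the end of the series.
    if start is not None and len(readings) - start >= min_run:
        runs.append((start, len(readings) - 1))
    return runs


# Hypothetical hourly PM10 values (µg/m³) with a monitor capped at 985.
hourly = [120.0, 640.0, 985.0, 985.0, 985.0, 985.0, 410.0]
print(find_saturated_runs(hourly, upper_limit=985.0))  # [(2, 5)]
```

A flag like this does not recover the missing data, but it tells operators that the reported maximum is a floor on the real value rather than a measurement.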

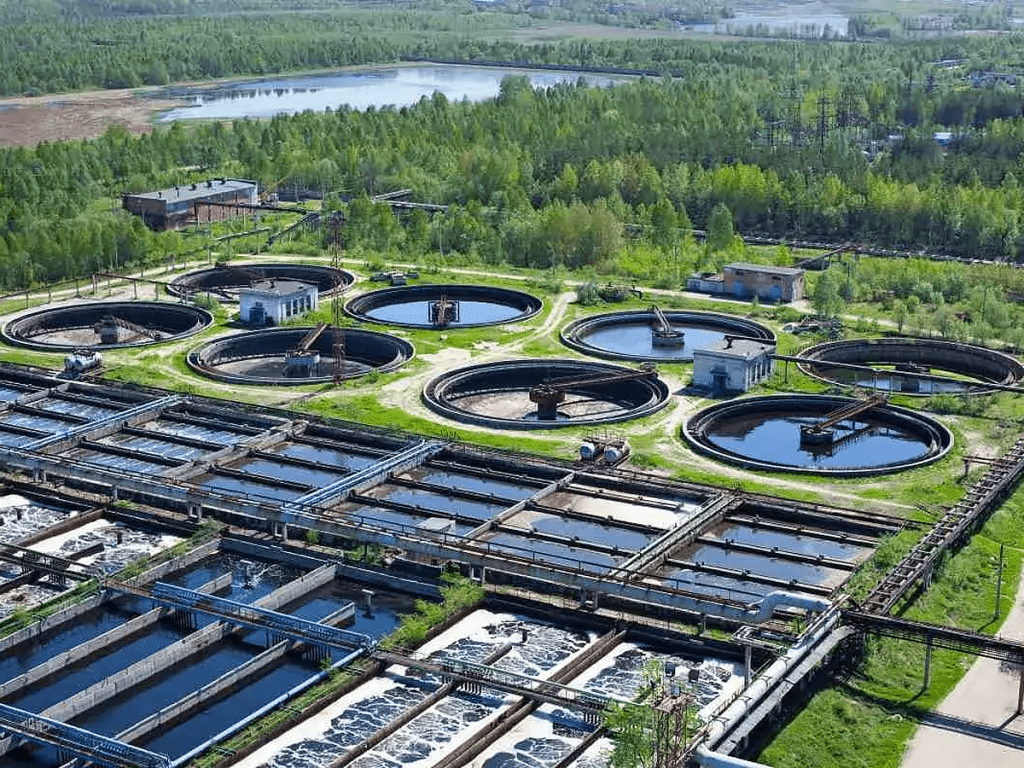

Oldsmar Water Treatment Facility (2021)

Organization: The Oldsmar Water Treatment Facility supplies drinking water to roughly 15,000 residents in Oldsmar, Florida. The facility uses a standard treatment process, including the controlled addition of sodium hydroxide (lye) to adjust water pH.

What Happened: In February 2021, an operator noticed his mouse cursor moving on its own, navigating the control systems tied to water treatment. A hacker had gained access and changed the sodium hydroxide setpoint from 100 parts per million to 11,100, a 111-fold increase that could have created caustic, potentially lethal drinking water.

OT Data Integrity Failure: The key issue was a lack of external safeguards around critical setpoint changes. It’s worth noting that several redundancies within the automation system would have raised alerts once levels became too high. Nonetheless, the system accepted the new dosage and attempted to implement it without requiring any authentication.

Ramifications: Fortunately, the operator spotted and reversed the change before any contaminated water reached residents. Though the threat was remote, thousands of people could have been seriously harmed. The incident also exposed broader vulnerabilities in water treatment infrastructure, since the attack was admittedly “not particularly sophisticated.”

Resolution: The facility added multiple data integrity controls, including multi-operator verification for critical changes. They also overhauled remote access policies and segmented their network to limit exposure.

Source: A Hacker Tried to Poison a Florida City’s Water Supply, Officials Say
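
Safeguards around critical setpoint changes, such as the multi-operator verification mentioned above, can be illustrated with a small guard that sits in front of the control system. This is a sketch under stated assumptions, not Oldsmar's implementation: the `SetpointGuard` class, its limits (50 to 200 ppm), the maximum step ratio, and the operator names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SetpointGuard:
    low: float             # lowest acceptable setpoint (assumed value)
    high: float            # highest acceptable setpoint (assumed value)
    max_step_ratio: float  # largest allowed requested/current ratio
    approvals_required: int = 2

    def evaluate(self, current, requested, approvers):
        """Return (accepted, reason) for a requested setpoint change."""
        # 1. Reject anything outside the engineered range outright.
        if not (self.low <= requested <= self.high):
            return False, "out_of_range"
        # 2. Reject abrupt jumps, even if the target is technically in range.
        if current > 0 and requested / current > self.max_step_ratio:
            return False, "step_too_large"
        # 3. Require distinct operators to sign off on the change.
        if len(set(approvers)) < self.approvals_required:
            return False, "needs_second_approver"
        return True, "ok"


guard = SetpointGuard(low=50, high=200, max_step_ratio=1.5)
# The change attempted in the incident: 100 ppm to 11,100 ppm.
print(guard.evaluate(100, 11_100, ["op1", "op2"]))  # (False, 'out_of_range')
```

The three checks are layered deliberately. A range limit alone would still permit a large jump within bounds, and approval alone would not help if a single compromised session could act as every approver.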

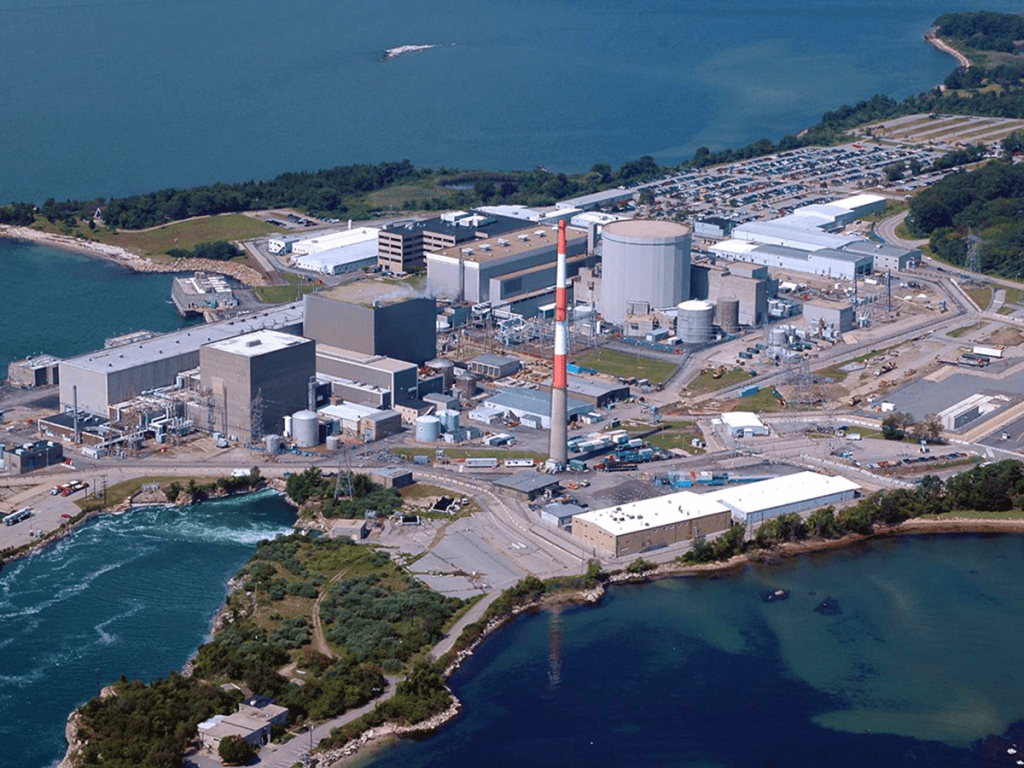

Millstone Nuclear Power Plant (2005)

Organization: Millstone Nuclear Power Station in Waterford, Connecticut, operated by Dominion Energy, consists of multiple reactor units and generates about 2,100 megawatts—enough to power hundreds of thousands of homes across New England.

What Happened: In February 2005, Millstone’s Unit 3 reactor was forced into an emergency shutdown. Operators traced the cause to a tin whisker, a tiny metal filament that can grow on electronic components, which had caused incorrect reactor coolant levels to be displayed for an extended period and made it look as though a steam line had been breached.

OT Data Integrity Failure: A fault in the data acquisition system caused it to report inaccurate water levels. The verification systems failed to detect the discrepancy, leaving operators unaware of the true state of the coolant system. The condition led safety systems to automatically shut down the reactor, as they are meant to do, and brought the electric generator to a halt.

Ramifications: Although there was no radiation release or immediate danger, the fact that a tin whisker could interfere with a critical safety system led the NRC to classify the event as significant. The unexpected shutdown disrupted operations and resulted in costly emergency measures and lost power generation.

Resolution: The company removed, photographed, cleaned and inspected 103 computer monitoring circuit cards at Unit 3 and replaced four that showed signs of tin whiskers. Millstone adopted stricter data verification protocols, including redundant sensor systems and automated cross-checking for critical readings. They also improved software validation procedures to flag inconsistencies more reliably.

Source: Reactor Shutdown: Dominion Learns Big Lesson From A Tiny ‘tin Whisker’
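
Automated cross-checking of redundant sensors, as described in the resolution above, often comes down to comparing each channel against a consensus value. The following is a minimal sketch of that idea using the median of the channels. The `cross_check` function, the channel names, and the deviation limit are assumptions for illustration and do not describe Millstone's actual instrumentation.

```python
from statistics import median


def cross_check(readings, max_deviation):
    """Compare redundant channels against their median.

    `readings` maps a channel name to its current value.
    Returns (median_value, suspect_channels), where a channel is suspect
    if it differs from the median by more than `max_deviation`.
    """
    mid = median(readings.values())
    suspects = sorted(
        name for name, value in readings.items()
        if abs(value - mid) > max_deviation
    )
    return mid, suspects


# Three hypothetical level channels; channel C has drifted far from the others.
channels = {"A": 50.2, "B": 49.8, "C": 12.0}
print(cross_check(channels, max_deviation=2.0))  # (50.2, ['C']) would be wrong; see note
```

With these inputs the median is 49.8, so the call returns `(49.8, ['C'])`. The median is used rather than the mean because a single faulty channel cannot drag the consensus value toward itself.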

Google Wharf 7 Office, Sydney, Australia (2013)

Organization: Google Australia’s Wharf 7 office is a modern commercial building in Sydney, outfitted with advanced building automation systems to manage climate control, lighting, and other operational technologies.

What Happened: In 2013, security researchers discovered that the building’s management system (BMS) was accessible over the public internet with minimal security protections. The discovery drew immediate concern given the sensitivity and scope of systems tied to the exposed interface.

OT Data Integrity Failure: The BMS, built on the Tridium Niagara AX platform, had not been patched to address known security vulnerabilities. As a result, researchers were able to remotely access control panels that displayed real-time system operations, including heating, cooling, and ventilation. The failure stemmed from both technical misconfiguration and a lack of ongoing maintenance—key lapses in OT data security and integrity.

Ramifications: Although there was no evidence of malicious tampering or physical damage, the exposure underscored how vulnerable even high-profile organizations can be when basic cybersecurity practices are overlooked. Had the access been exploited, attackers could have disrupted building operations or used the system as a foothold for further network intrusion.

Resolution: Following the disclosure, Google took immediate action by severing the BMS from the public internet. The incident prompted renewed attention to the configuration and patch management of integrator-deployed systems. Google emphasized the need for secure deployment practices, including routine updates, password protections, and strict network segmentation.

Source: Researchers Hack Building Control System at Google Australia

Immaculata Benedictine Sisters Monastery, Norfolk, Nebraska (2011)

Organization: The Missionary Benedictine Sisters Monastery is a religious facility that underwent major renovations to modernize its infrastructure, including the installation of updated HVAC equipment and a new building automation system designed to optimize environmental control.

What Happened: Shortly after the remodeled facility reopened, the monastery began experiencing serious indoor climate issues. Despite new systems being in place, the building could not maintain stable humidity levels during warmer months, resulting in widespread operational problems.

OT Data Integrity Failure: The newly installed building automation system failed to properly regulate humidity, particularly during the summer. The BMS either misread or mismanaged environmental data, leading to excessive moisture throughout the building. This breakdown in OT data accuracy and response contributed to boiler malfunctions, accelerated corrosion of water heaters, building envelope condensation, and the proliferation of mold. These are clear signs that control logic or sensor calibration was flawed.

Ramifications: The uncontrolled humidity created both health and structural risks for the occupants, undermining the purpose of the renovation. Mold and condensation led to damage within the building envelope, and legal proceedings were initiated to resolve accountability for the system’s deficiencies.

Resolution: Outside experts were brought in to perform a full diagnostic evaluation of the HVAC and control systems. Their assessment identified the root causes of failure and resulted in targeted recommendations to correct the system’s control logic, restore environmental stability, and prevent future humidity-related damage.

Source: HVAC High Humidity Fail

Bank of America Plaza, Atlanta (2017)

Organization: The Bank of America Plaza is Atlanta’s tallest skyscraper, with more than 1.3 million square feet of office space. The building uses a comprehensive automation platform to manage occupancy-based lighting and HVAC schedules across its 55 floors.

What Happened: In 2017, tenants began reporting that lights and air conditioning were operating outside normal hours. Energy use reports revealed that the system was running full-scale overnight and on weekends in some zones—despite the building being mostly unoccupied.

OT Data Integrity Failure: The automation platform’s occupancy schedule was driven by access card data and motion sensors. A software update introduced a bug that corrupted access logs, making it appear as though hundreds of occupants were still present in the building after hours. Motion sensor data, which should have served as a check, was being ignored due to misconfigured fallback rules in the system.

Ramifications: Over several weeks, the building consumed significantly more energy than expected. Operating costs increased by tens of thousands of dollars, and tenants raised concerns about sustainability and security. The incident damaged the building’s LEED performance metrics and required extensive forensic IT support to identify the root cause.

Resolution: Building management partnered with the automation vendor to develop a real-time data validation layer between access control and building automation. They also revised fallback logic to treat motion sensor data as primary in certain conditions and added alerts for data conflicts between subsystems. Software updates were put through a more rigorous QA process with sandbox testing environments to detect similar issues before deployment.

Source: Elevator World: Position Sensing Challenges in Supertall Buildings
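
The validation layer described in the resolution above, where access control data is checked against motion sensors and conflicts raise an alert, might look something like the sketch below. It simplifies the fallback rule by always treating motion data as primary, whereas the account says this applied only in certain conditions. The `ZoneDecision` type, the `reconcile_zone` function, and the 30-minute quiet period are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ZoneDecision:
    occupied: bool  # what the lighting and HVAC schedule should assume
    alert: bool     # True when the two data sources disagree


def reconcile_zone(badge_count, minutes_since_motion, quiet_minutes=30):
    """Decide zone occupancy from two independent data sources.

    badge_count: people the access logs claim are still inside.
    minutes_since_motion: time since any motion sensor in the zone fired.
    """
    motion_says_occupied = minutes_since_motion <= quiet_minutes
    badges_say_occupied = badge_count > 0
    # Disagreement between subsystems is itself a data integrity signal.
    conflict = motion_says_occupied != badges_say_occupied
    # In this sketch motion data wins, so corrupted access logs
    # cannot keep an empty floor running overnight.
    return ZoneDecision(occupied=motion_says_occupied, alert=conflict)


# Access logs claim 240 people are present, but nothing has moved for 3 hours.
print(reconcile_zone(badge_count=240, minutes_since_motion=180))
```

Returning the alert alongside the decision keeps the building running sensibly while still surfacing the disagreement for someone to investigate.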

Conclusion: Don’t Let Bad Data Undermine Your OT Operations

The cases above are stark reminders that even the most advanced building systems are only as reliable as the data driving them. From fatal air quality failures in hospitals to sky-high energy bills in commercial towers, poor data integrity isn’t just a technical glitch—it’s a root cause of operational, financial, and reputational risk.

Ensuring data integrity means more than checking a few sensor readings. It requires end-to-end visibility across your OT network, the ability to spot anomalies before they escalate, and the tools to validate what’s really happening behind the scenes. That’s where Optigo Visual Networks comes in. With powerful diagnostics, historical context, and intuitive monitoring, OptigoVN gives you the confidence that your data—and your decisions—are built on a solid foundation.

Don’t wait for a data failure to make you take action. Contact us to set up a personalized demo, or create your own account today and explore for free.